Data center leaders are always looking for ways to improve performance, efficiency, and speed. The innovations that result drive the growth of the colocation industry.

One example is the carrier-neutral data center. These facilities allow customers to use any fiber provider they want to connect with their servers in the data center.

1. Core & Pod Design

A core-and-pod design builds the data center from a collection of common building blocks, or pods, that support different applications. Pods vary in hardware redundancy and component quality, but each is designed to meet the requirements of its specific application. Each pod is then connected to the core, a data center fabric that distributes data between the pods. This approach allows the overall architecture to evolve without requiring changes to the hardware inside each building block, reducing the risk of expensive and time-consuming rip-and-replace efforts.

Pod and core architectures also reduce the need for complex integration and systems management between disparate infrastructure systems. For example, many vendors offer engineered racks of equipment—sometimes called a data center “pod”—that combine computing, storage, and network hardware into a single unit.

With a pod-and-core design, IT teams can focus on assessing the specific performance needs of their primary application and designing solutions that address those needs. This eliminates the Frankenstein method of piecing together multiple disparate infrastructure systems, and it can result in significant financial and operational benefits.

Another example is Facebook’s Altoona design, which offers a unique and flexible way to address massive-scale workloads. Although the design is Facebook-specific, it can provide valuable insight into how to address future massive-scale deployments in a highly reliable and cost-effective way.

2. Virtualization

Data centers host large quantities of sensitive information, both for internal purposes and for customers. Various technologies improve data storage capabilities and reduce access times, while software-defined infrastructure makes staff more efficient. These solutions also make it easier to implement changes, update systems, and apply security patches.

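Virtualization decouples applications from individual physical machines, so several workloads can share one server instead of each occupying its own. Total demand is still bounded by the capacity of the underlying hardware, but because dedicated servers often run well below that capacity, consolidation lets the same hardware host more applications. As a result, the number of servers, racks, and cooling equipment can be significantly reduced, cutting down on costs and maintenance.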
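As a rough illustration of the consolidation arithmetic, the sketch below estimates a lower bound on the number of hosts needed once workloads share hardware. The function name `hosts_needed`, the 70% utilization target, and the GHz figures are assumptions made for this example, not values from any particular platform.

```python
import math


def hosts_needed(vm_demands_ghz, host_capacity_ghz, target_utilization=0.7):
    """Lower bound on hosts required to carry the summed CPU demand.

    Ignores memory, I/O, and bin-packing effects, so a real placement
    may need more hosts than this estimate.
    """
    if host_capacity_ghz <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    usable_ghz = host_capacity_ghz * target_utilization
    return math.ceil(sum(vm_demands_ghz) / usable_ghz)


# Hypothetical fleet: 20 workloads, each using about 2 GHz on its own server.
demands = [2.0] * 20
dedicated = len(demands)                     # one physical server per workload
consolidated = hosts_needed(demands, 40.0)   # shared hosts with 40 GHz each
print(dedicated, consolidated)               # prints: 20 2
```

The utilization target leaves headroom for load spikes; raising it reduces the host count further at the cost of less slack.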

In addition, virtualization improves testing capabilities: a snapshot captures the state of an environment, which can be rolled back to that earlier state in the event of a software error. Running more workloads on fewer machines also helps to cut down on expensive hardware upgrades and improve energy efficiency.
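The snapshot-and-rollback workflow can be sketched with a toy model. The `VirtualEnvironment` class and its state dictionary are hypothetical stand-ins; real hypervisors typically capture disk and memory state rather than a Python object.

```python
import copy


class VirtualEnvironment:
    """Toy stand-in for a VM whose state can be snapshotted and restored."""

    def __init__(self, state):
        self.state = state
        self._snapshots = {}

    def snapshot(self, name):
        # Deep copy so later changes to self.state do not alter the snapshot.
        self._snapshots[name] = copy.deepcopy(self.state)

    def rollback(self, name):
        # Restore a copy, so the stored snapshot can be reused later.
        self.state = copy.deepcopy(self._snapshots[name])


env = VirtualEnvironment({"app_version": "1.0", "packages": ["libfoo-1.2"]})
env.snapshot("before-upgrade")

# Simulate an upgrade that turns out to be faulty.
env.state["app_version"] = "2.0-broken"
env.state["packages"].append("libbar-0.9")

env.rollback("before-upgrade")
print(env.state)
```

After the rollback, the environment matches the state captured before the upgrade, which is what makes it cheap to try risky changes in a test environment.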

Keep tabs on all aspects of your data center to increase operational efficiency. Monitoring enables better management and optimization of critical parameters like power usage effectiveness (PUE). By tracking these factors regularly, it’s possible to predict future needs for space, power, and cooling and optimize resource allocation accordingly.
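For reference, PUE is the ratio of total facility energy to the energy used by IT equipment, so a value closer to 1.0 means less overhead. The sketch below computes it and adds a simple straight-line forecast of when peak load would reach capacity. The forecasting approach, the function names, and the sample figures are assumptions for illustration; production tools use richer models.

```python
from __future__ import annotations


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def linear_trend(samples: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept for evenly spaced samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def months_until_capacity(monthly_peak_kw: list[float], capacity_kw: float) -> float | None:
    """Months after the last sample until the trend line reaches capacity."""
    slope, intercept = linear_trend(monthly_peak_kw)
    if slope <= 0:
        return None  # load is flat or falling, so this trend never hits capacity
    crossing = (capacity_kw - intercept) / slope
    return max(0.0, crossing - (len(monthly_peak_kw) - 1))


# Hypothetical readings for illustration.
print(pue(1500.0, 1000.0))                                      # prints: 1.5
print(months_until_capacity([100.0, 110.0, 120.0, 130.0], 160.0))
```

With the sample data, peak load grows by 10 kW per month from 130 kW, so the trend reaches the 160 kW limit about three months after the last reading.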

Nlyte is your source of truth, combining the data from facilities, IT, and business systems to deliver performance and capacity insights across your entire operation. Its what-if models forecast the exact capacity impact of upcoming projects on your facility, energy, and networks.

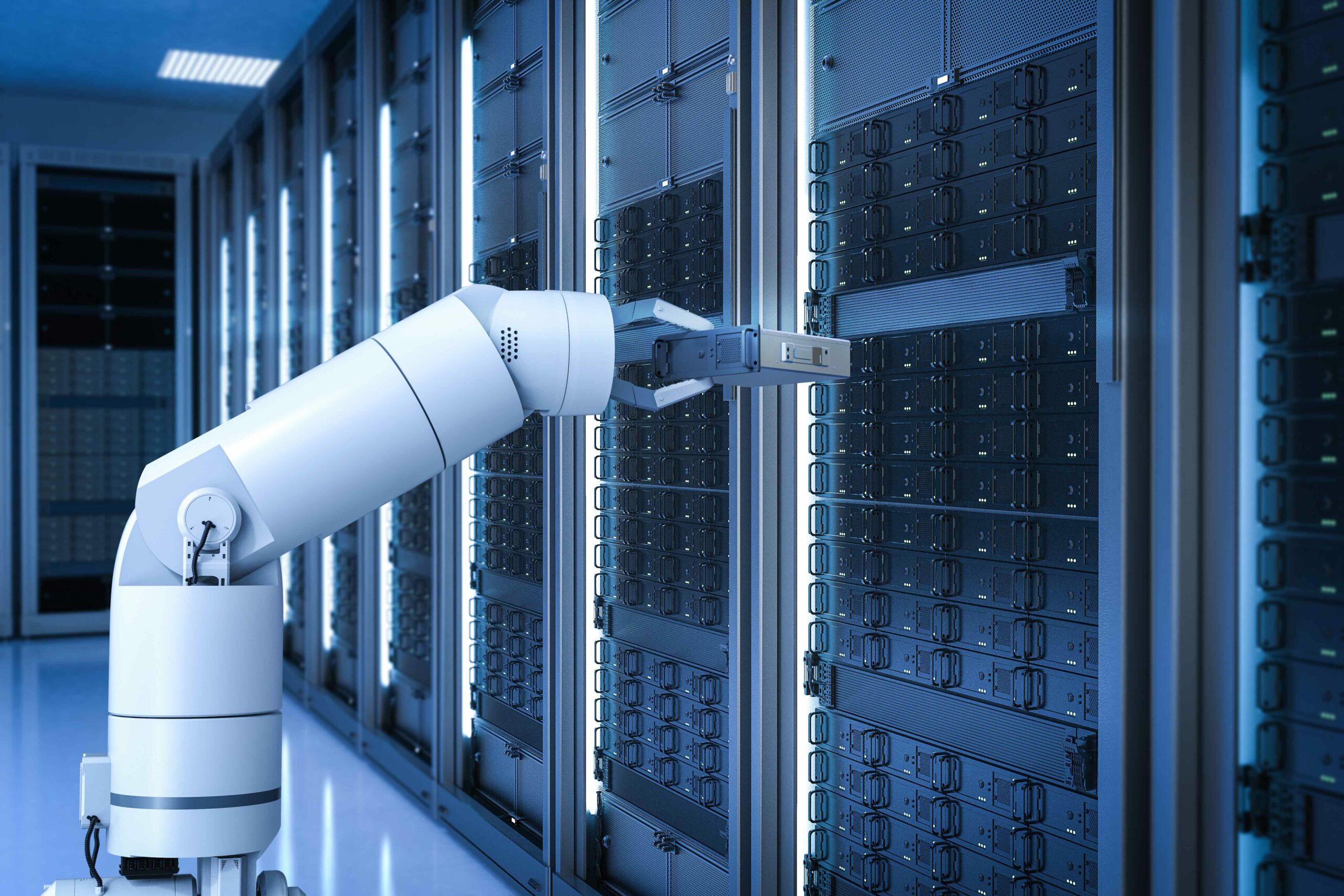

3. Self-Healing Infrastructure

A data center can look like a scene from The Matrix, with rows of servers, cooling towers, and an absurd amount of network cables. However, keeping uptime as close to 100% as possible requires more than just basic redundancy.

As IT teams collect troves of data on system and process performance, automation solutions such as AI for IT operations (AIOps) can help detect trends and patterns. Then, these solutions can automatically provision and de-provision resources to minimize outages and performance degradation.

Self-healing infrastructure takes the concept of network automation even further, detecting and resolving problems without human intervention. The result is a dependable and resilient network that improves the availability of business applications and systems and reduces operational costs.

To achieve this level of self-healing functionality, it’s important to understand the four core components of a self-healing network: detect, predict, remediate, and prevent. Detection refers to identifying issues and their source based on anomalies, threshold breaches, escalation levels, and other metrics. Predictive analytics uses historical and real-time network telemetry to identify potential issues like hardware failure or application performance degradation.

Finally, remediation consists of automated responses to alerts, such as escalating to support agents or running scripts that resolve incidents, and prevention builds on the same automation to keep those incidents from recurring. This level of automation can drastically reduce the time IT staff must spend on repetitive manual troubleshooting and free them up to focus on high-value activities.
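The four components can be sketched as four small functions. This is a minimal illustration under stated assumptions, not how any particular AIOps product works: the `Reading` type, the thresholds, the runbook, and the recurrence-counting rule used for prevention are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    device: str
    metric: str
    value: float


def detect(readings, thresholds):
    """Detect: return readings that breach their metric's threshold."""
    return [r for r in readings
            if r.metric in thresholds and r.value > thresholds[r.metric]]


def predict(history, threshold, horizon=3):
    """Predict: extrapolate the average change per sample `horizon` steps ahead."""
    if len(history) < 2:
        return False
    step = (history[-1] - history[0]) / (len(history) - 1)
    return history[-1] + step * horizon > threshold


def remediate(breach, runbooks):
    """Remediate: run the matching script, or escalate if none exists."""
    action = runbooks.get(breach.metric)
    if action is None:
        return f"escalated {breach.metric} on {breach.device} to support"
    return action(breach)


def prevent(incident_log, limit=3):
    """Prevent: list (device, metric) pairs that recur often enough to need a permanent fix."""
    counts = Counter((r.device, r.metric) for r in incident_log)
    return [key for key, n in counts.items() if n >= limit]


# Hypothetical thresholds and runbook, for illustration only.
thresholds = {"cpu_percent": 90.0, "packet_loss_percent": 2.0}
runbooks = {"cpu_percent": lambda r: f"restarted runaway service on {r.device}"}

readings = [
    Reading("sw-01", "packet_loss_percent", 4.5),
    Reading("srv-07", "cpu_percent", 97.0),
    Reading("srv-08", "cpu_percent", 41.0),
]

incident_log = []
for breach in detect(readings, thresholds):
    print(remediate(breach, runbooks))
    incident_log.append(breach)

# A rising CPU trend is flagged before it crosses the threshold.
print(predict([70.0, 75.0, 80.0, 85.0], thresholds["cpu_percent"]))
```

Keeping the stages separate means a breach with no runbook still reaches a human through escalation, while recurring incidents accumulate in the log that `prevent` inspects.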

4. Hybrid Cloud

The hybrid cloud model offers many benefits for businesses. It gives organizations flexibility on where they can deploy data and workloads, which speeds up IT response to changing requirements and strengthens digital business initiatives.

It also helps organizations manage costs by separating capital expenses from variable and operational costs. In addition, because a hybrid solution combines public and private clouds, it can reduce the risk of relying on a single vendor.

The key to a successful hybrid cloud environment is ensuring all applications and workloads have access to the best resources available. This requires a network that seamlessly connects onsite and offsite environments, such as on-premises data centers and third-party public clouds. This can be achieved using a combination of data virtualization and connectivity tools.